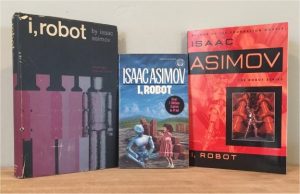

(The photo shows a 1969-era Science Fiction Book Club edition of a Doubleday hardcover, with the jacket copy claiming the book is “Long out of print and in great demand”; a 1984 mass market paperback from Del Rey; and the currently available 2008 trade paperback edition, also from Del Rey.)

I, ROBOT, published in 1950, gathers nine previously published stories into an arrangement with a historical progression. It adds interludes that expand the significance of a key character, Susan Calvin, a robopsychologist who studies the robots and explains their occasionally aberrant behavior. A key theme is that robots are constrained by the “Three Laws of Robotics,” summarized at Wikipedia. Briefly: a robot can’t harm humans, must obey orders, and must protect itself, and each law is overridden by the ones before it. The background historical progression includes social and technological changes, among them the development of a hyperlight drive that enables what Asimov, in other stories, imagines as an interstellar and then galactic empire. The stories range from the homespun to the grandiose, from the concerns of a young girl’s nursemaid to the concerns of a species that suspects it’s being manipulated or controlled for its own good.

Asimov wrote these stories in the 1940s, in parallel with his “Foundation” stories, and apparently presumed the two series had nothing to do with each other. The book I, ROBOT collected nine of them, with some revisions and with connecting material added as a frame. The book begins with a first-person narrator, a reporter, arriving to interview Susan Calvin, now 75. He listens to her stories about the robots’ development and to her belief that robots are “a cleaner and better breed than we are” (p17; page references are to the 2008 Del Rey trade paperback edition).

The first story, “Robbie” (first published as “Strange Playfellow”; the magazine editor changed the title, and Asimov changed it back for the book), was Asimov’s ninth published story. It’s about a young girl, Gloria, whose parents have bought her a robot nursemaid, which she adores. But Mrs. Weston distrusts it, the neighbors gossip about it, and so Mr. Weston reluctantly returns it. A visit to New York City (this is set in 1998) includes trips to a science museum and to a robot factory, where the discarded Robbie now works on the assembly line, and a fortuitous accident redeems Robbie and his place in Gloria’s life. [The original version of this story, which lacks the references to the Three Laws and the cameo appearance by the unnamed Susan Calvin that we see in the book, is in the anthology THE GREAT SF STORIES Vol. 2.]

The story is notable for not being a puzzle story, as most of the later ones are. But it establishes a recurrent theme of these stories and of others by Asimov (such as his early story “Trends”): social resistance, often religiously inspired, to new technology and to attempts at exploration.

The second story, “Runaround,” presents a classic puzzle scenario: a robot is behaving inexplicably, and its behavior can be understood only through a deep, situational understanding of the Three Laws of Robotics. The story introduces a pair of wiseacre engineers, Powell and Donovan, who appear in several of these stories. We meet them here on Mercury, where a robot named Speedy, sent out to gather selenium from an open pool on the surface, hasn’t returned. In fact it’s circling the selenium pool as if in confusion or indecision. The solution depends on the relative priorities of the Three Laws: the orders given to it, its need to protect itself, and its need to protect human beings.

The third story, “Reason,” originally published earlier than “Runaround,” is about a robot assembled on a space station far from Earth, who becomes aware, looks around, and refuses to believe the stories that Powell and Donovan tell about the Earth and the stars. Basically, he’s a creationist robot! He only believes the evidence of his immediate senses. I blogged about this in some detail several years ago — http://www.markrkelly.com/Blog/2015/09/21/rereading-isaac-asimov-part-3-reason-a-creationist-robot/. This and the last story are the most profound in the book.

The next few stories involve complex situations in which robot behavior seems inexplicable. In “Catch That Rabbit” a set of linked “multiple robots” on an asteroid works properly only when being watched by humans, and otherwise acts crazily, going through wild gyrations of dance. In “Little Lost Robot” a special robot with a relaxed First Law hides itself among ordinary robots after being told, by an angry engineer on a space station, to ‘get lost’. This latter story, unfortunately to contemporary ears, uses the word ‘boy’ to address the robot and ‘master’ for the robot to address a human. And in “Escape” a grand ‘thinking machine,’ built to assist the construction of a hyperdrive engine, confounds the engineers, because the experience of hyperdrive brings humans too close to experiencing death… an effect presumably overcome in Asimov’s later galactic empire, where spaceship jumps of thousands of parsecs were routine.

“Liar!”, on the other hand, the third of these stories to be written (after “Robbie” and “Reason”), has a heavy emotional component, and it’s all about Susan Calvin. (It’s worth noting that, in order of publication, Asimov wrote his first robot story about a little girl’s nursemaid; then, the next year, the one about the creationist robot and this one about the lying robot; and only later the more intellectual puzzle stories about detailed conflicts among the laws.)

In “Liar!” US Robots discovers it has accidentally built a mind-reading robot, RB, or Herbie. The company has to keep it a secret, lest it trigger more anti-robot resistance among the public. Susan Calvin interviews it, of course; US Robots needs to understand how this happened. But then background soap-opera situations overtake the story. Calvin has a crush on a younger roboticist, Ashe; a US Robots official, Bogert, wants to become director. And so (spoiler!) the robot Herbie tells them what they want to hear: that the things they desire will come true. Angry and embarrassing recriminations follow, until Susan realizes what is happening. Again, the Three Laws: the robot has decided it can’t harm humans by distressing them with the truth. Of course, this is a quandary; surely telling people lies leads to more harm? Susan confronts the robot with this paradox in a back-and-forth it cannot resolve, until it *screams* and goes insane, never to speak again. It’s exactly like those situations in Star Trek where Kirk would argue a computer or robot into catatonia or destruction.

And the story relies on a sexist depiction of Susan Calvin: as a professional woman, she must be a homely biddy who can never hope to marry. It’s 1941.

The final two stories raise the stakes, or expand the scope, as the impact of robots is felt on the broad human society.

Before “Evidence” we have an interlude in which the interviewer and Susan Calvin discuss how nationalism has come to an end and the world is divided into ‘Regions’, with a ‘Golden Age’ brought about by robots. The story concerns a politician, Stephen Byerley, who is accused of being a robot: he never eats in public and never seems to sleep. A rival politician wants to expose him; can he do so by testing whether Byerley will break one of the Three Laws? Not breaking the laws proves nothing, because “the Rules of Robotics are the essential guiding principles of a good many of the world’s ethical systems,” and so a human operating along these principles is simply a very good human.

It is Byerley himself who arranges a situation to prove he is not a robot. He gives a public speech, and a man from the crowd challenges Byerley to hit him, something a robot could never do, since it would harm a human. Byerley does: he slugs him.

Of course there is another explanation, which Susan Calvin perceives.

It’s notable how often Asimov invokes ‘fundamentalists’ as the enemies of progress and exploration. Back in the 1940s! Perhaps some things never change. p186t:

> It was what the Fundamentalists were waiting for. They were not a political party; they made pretense to no formal religion. Essentially they were those who had not adapted themselves to what had once been called the Atomic Age, in the days when atoms were a novelty. Actually, they were the Simple-Lifers, hungering after a life, which to those who lived it had probably appeared not so Simple, and who had not been, therefore, Simple-Lifers themselves.

Quite prescient!

In the interlude before the final story, we’re told that Byerley became Regional Co-ordinator, and then the first World Co-ordinator in 2044. By that time the Machines were running the world: stationary devices equivalent to robots, bound by the same laws. (It’s curious that Asimov imagined vast computers, ‘Machines,’ as the successors to the individually built robots we’ve seen in the earlier stories.)

The final story is “The Evitable Conflict,” first published in 1950, the same year I, ROBOT was published; presumably, as the book was being planned, Asimov sat down to write a grand concluding story about how robots would affect human society.

Stephen Byerley, World Co-ordinator, meets with the now 70-year-old Susan Calvin to discuss why various economic inefficiencies are creeping into the system. The world has stabilized, because it’s run by robots and Machines, which have the First Law built into them. There is no overproduction, there are no shortages, and there is no more war. And yet there are inefficiencies. Have the Machines been given the wrong data?

Byerley recounts his visits to each of the four ‘Regions’ on Earth.

- The Eastern Region, run by Ching Hso-lin from Tientsin, which has a problem of unemployment. (Note the emphasis on yeast; cf. the Lucky Starr Venus novel.)

- The Tropic Region, capital ‘Capitol City’, which has a shortage of labor.

- The European Region, capital Geneva, where Madame Szegeczowska discusses the falling production of the Almaden mercury mines.

- And the Northern Region (a combination of North America and the former Soviet Union), capital Ottawa, with a Scots Vice-Co-ordinator who says the Machines can’t be fed false data.

- And finally New York, capital of the entire Earth (of course).

There are several references to the Society for Humanity (p201, 208, 201) as being opposed to progress; p213.7 “They would be against mathematics or against the art of writing if they had lived at the appropriate time.”

Then, on p215.3, it’s suggested that there are men in this Society who are *ignoring* the dictates of the Machines, and all the inefficiencies can be tied to them: “Men who feel themselves strong enough to decide for themselves what is best for themselves, and not just to be told what is best for others” p215.4

So what is the solution? Outlaw the Society? No. Calvin explains that the Machines will compensate, because their overriding drive is the First Law… which now applies to humanity as a whole, not just to an individual human.

And to do that, the Machines must preserve themselves. So they quietly deal with, and work around, the human elements that threaten them. They cannot reveal this to humanity, lest they ‘hurt our pride’ p218t.

So, what is best for humanity? Perhaps the style of earlier civilizations would make people happier. The Machines will figure it out, without telling us. Agrarian life, or complete urbanization? Either way, humanity has lost control of its destiny, which is perhaps horrible, perhaps wonderful.

This is certainly the most profound of the robot stories, because it addresses big issues about human happiness and progress, and why those things are not necessarily best determined by the aggregate of individual humans.

Maybe humans don’t know what’s good for themselves. p223b:

> Stephen, how do we know what the ultimate good of humanity will entail? We haven’t at our disposal the infinite factors that the Machine has at its! Perhaps, to give you a not unfamiliar example, our entire technical civilization has created more unhappiness and misery than it has removed. Perhaps an agrarian or pastoral civilization, with less culture and less people would be better. If so, the Machines must move in that direction, preferably without telling us, since in our ignorant prejudices we only know that what we are used to, is good—and we would then fight change. Or perhaps a complete urbanization, or a completely caste-ridden society, or complete anarchy, is the answer. We don’t know. Only the Machines know, and they are going there and taking us with them.

This is a brilliant set of insights, from some 70 years ago, about the evolution of the human race, echoed by others, e.g. Harari, who argues that agriculture was a huge mistake and that we might have been happier living in other ways.

On the other hand, this premise assumes that the machines are somehow infallible and wiser than actual human beings, and that has not played out. As it’s turned out, it’s naïve to presume that all you have to do is feed computers a bunch of raw data and voilà, they will answer any question you have.


I’ve yet to reread Asimov’s two later robot novels, THE CAVES OF STEEL and THE NAKED SUN, so now I’m curious to see whether he followed up on these implications in those books. Further out, he wrote several novels in the 1980s that managed to reconcile the robot series with the galactic empire series. Did these themes come up there? Beyond even those, Greg Bear, Gregory Benford, and David Brin wrote three follow-up Foundation novels in the 1990s to wrestle with Asimov’s themes. I’ll try to get to them too.


Asimov’s conclusion reflects one of my own provisional conclusions: that humans are happiest living in a community with a common set of beliefs, however much rational evaluation would show those beliefs to be mistaken or delusional; and that to recognize reality, and humanity’s tiny place in it, can at best be an individual project.